a tribute to Ryuichi Sakamoto with pent @ The Lot Radio 04-16-2023

Caught a replay of this mix on The Lot Radio this afternoon and noting it here for the archive :)

I’ve been trying to use social media less and this site more. The hope is to keep a more thorough, short-form log of stuff I’ve read, watched, made, eaten, thought about, etc. However, since the site is a Jekyll-backed static site, I previously had to be at my laptop to post a new entry.

While there are more sophisticated options for managing Jekyll sites, I really just wanted a simple web form for posting a new entry that I could access from my phone.

After a few hours of hacking, I put together a simple FastAPI endpoint which performs the following operations:

Since this site uses GitLab CI to publish new changes, the new post shows up in 1-2 minutes.

Some things I’d like to add in the future:

Here are links to the form and the source code.

Watched Pedro Almodovar’s Women on the Verge of a Nervous Breakdown. Enjoyed the intro sequence with Ivan’s voiceover, paired with the fact that he only interacts with Pepa via voice messages (including their shared work dubbing English films into Spanish) until the end of the film. It’s as if some men treat relationships as nothing more than a series of cliché lines cribbed from pop culture. I saw Almodovar’s recent short with Tilda Swinton, The Human Voice, earlier this year and it clearly drew upon this earlier film, including the vibrant palette of reds, greens, and blues.

Watched Marty last night, a low-key story about a lonely butcher in the Bronx. I loved the back-and-forth between the title character and his friend trying to figure out what to do on a Saturday night, the characters of his mother and aunt, and this line:

Ma, whaddaya want from me? Whaddaya want from me? I’m miserable enough as it is. All right, so I’ll go to the Stardust Ballroom. I’ll put on a blue suit, and I’ll go. And you know what I’m gonna get for my trouble? Heartache. A big night of heartache.

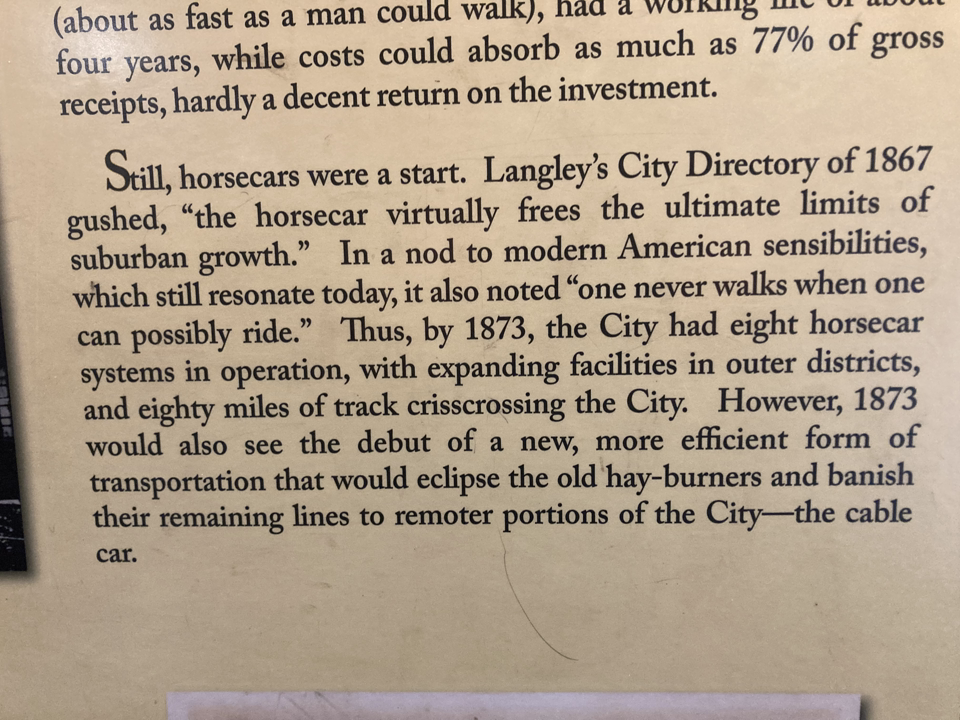

Quote from “Langley’s City Directory” about commuting in San Francisco circa 1867. At the Cable Car Museum.

I initially came across this article through a citation in Software Sustainability, a collection of articles on the topic of “Green in Software” - generally how to make software more sustainable by reducing its carbon footprint. The article referenced “Wirth’s Law” which is:

Software is getting slower more rapidly than hardware becomes faster.

This can be contrasted with “Moore’s Law”, the better-known observation that the number of transistors on a chip (a rough proxy for the complexity of computers) doubles about every two years.

Together, these two “laws” form the “Jevons Paradox” as applied to computing, in which increases in the energy efficiency of computers (defined as the ratio of processing power output to energy input) have lowered costs and thereby increased demand, negating the efficiency gains. This paradox was initially observed after Watt’s steam engine, which made coal more cost-effective, led to the increased use of steam engines, and therefore coal, as well.
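To make the paradox concrete, here is a toy calculation. The numbers are made up purely for illustration (they are not from the article): efficiency doubles, but cheaper computing triples the amount of work demanded, so total energy use still goes up.

```python
def energy_used(work_demanded: float, work_per_unit_energy: float) -> float:
    """Total energy consumed to perform a given amount of computing work."""
    return work_demanded / work_per_unit_energy


# Hypothetical "before": 100 units of work at an efficiency of 1 unit of work per unit of energy.
before = energy_used(work_demanded=100, work_per_unit_energy=1.0)

# Hypothetical "after": efficiency doubles, but lower costs triple the work demanded.
after = energy_used(work_demanded=300, work_per_unit_energy=2.0)

print(f"before: {before}, after: {after}")
```

As long as demand grows faster than efficiency, the second number comes out larger than the first, which is the whole paradox.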

Been having fun making drones with my electric toothbrush. Opening and closing your mouth while using it functions kind of like an LFO/low pass filter.

Sitting in the seat of empire tonight, I rest comfortably in my quiet home, under the soft glow of dimmed lights, listening to music, cuddling my cat, drinking fresh water, and staring at a flat plane of glass informing me of a genocide in progress. Like many sitting in the seat of empire tonight, this feeling is all too familiar. The window into this atrocity is smaller, the voices reporting it are those of my friends, the pleas to politicians are automated, but the sense of powerlessness remains.

I scroll through social media until my device tells me I’ve reached my daily limit. I call my representatives and Cisco Systems tells me their inboxes are full. I make donations which my credit card flags as fraud. I march in the street and am surrounded by police in riot gear. The systems of power which secure my comfort, which provide me with food and water and entertainment, also prevent me from taking meaningful action. The systems of power which secure my comfort are committing genocide on my behalf, without my consent.

What is there to do? What can be done? I tell myself to not give up hope, to not look away, to follow the guides and click the links in the Google Docs. I dream of walking door-to-door, like some trick-or-treater dressed up as Thomas Jefferson, pleading with my neighbors to “Free Palestine.” I fantasize of standing on a busy corner holding up a sign or writing an urgent call to action in my company Slack. Maybe I’ll even change my profile picture.

Tomorrow, I will wake up, lying in bed, and thousands of Palestinians will be lying dead under blood-stained sheets. Maybe I shouldn’t sit so comfortably in the seat of empire tonight.

Ten years ago, I wrote an essay for my Open News Fellowship which critically assessed the Web Analytics industry’s proclamation that “the pageview is dead.” The essay got some traction online and was eventually used as a framework for analyzing a right-wing politician’s use of the “rapture” when criticizing Obama’s foreign policy. The gist of the argument, which borrowed from Jacques Derrida’s 1982 essay Of an Apocalyptic Tone Recently Adopted In Philosophy, was that doomsayers are primarily “concerned with seducing you into accepting the terms on which their continued existence, their vested interests, and their vision of ‘the end’ are all equally possible.” As Derrida concisely put it: “the subject of [apocalyptic] discourse [hopes] to arrive at its end through the end.” A decade later, I can confidently say that the pageview is very much still alive and that the leaders of the analytics companies who predicted otherwise are now very rich.

––

Yesterday, a group of academic and industry leaders signed a statement which issued this stark, apocalyptic proclamation:

Mitigating the risk of extinction from A.I. should be a global priority alongside other societal-scale risks, such as pandemics and nuclear war.

The statement implies that since AI is a “societal-scale” risk, governmental regulation on the scale of responses to other apocalyptic threats is required (note their blatant omission of climate change). By positioning its signatories as “AI experts,” the statement also puts them in a natural position to help craft these regulations given the complexity of the technology. While they are purposefully vague on what an AI apocalypse might look like, most doomsday scenarios follow the concept of the “singularity” in which AI undergoes “a ‘runaway reaction’ of self-improvement cycles… causing an ‘explosion’ in intelligence and resulting in a powerful superintelligence that…far surpasses all human intelligence.” As the story goes, this superintelligence, no longer moored by its creators, begins to act in its own interests and eventually wipes out humanity. A particularly sophisticated and influential example of this narrative was published on the online forum LessWrong last year.

––

Earlier this month, an interesting document leaked which was confirmed to have originated from a Google AI researcher. The essay, entitled We Have No Moat, And Neither Does OpenAI, traces the rapid development of open-source alternatives to OpenAI’s and Google’s large language models (LLMs) over the past few months. It argues that the open-source community is much better equipped to push the forefront of LLM research because it’s more nimble, less bound by bureaucratic inefficiencies, and has adopted tools like “Low-Rank Adaptation” for fine-tuning models without the need for large clusters of GPUs. As the author summarizes:

We have no secret sauce. Our best hope is to learn from and collaborate with what others are doing outside Google.

People will not pay for a restricted model when free, unrestricted alternatives are comparable in quality. We should consider where our value add really is.

Giant models are slowing us down. In the long run, the best models are the ones which can be iterated upon quickly.

For anyone who has been closely following the development of open-source tooling for LLMs over the past couple of months, these assertions are not particularly controversial. Each day brings a fresh crop of tweets and blog posts announcing a new software package, model, hosting platform, or technique for advancing LLM development. These tools are then quickly adopted and iterated upon, leading to the next day’s announcements. In many ways, this rapid advancement mirrors the ‘runaway reaction’ at the heart of the singularity narrative.

––

If we understand apocalyptic narratives as a rhetorical sleight-of-hand, then we must ask the same questions of the AI industry which my previous essay asked of Web Analytics:

What then of the [AI] apocalypse and the prophets who giddily proclaim it? To what ends are these revelations leading us? What strategic aims and benefits are these claims predicated upon?

Given the arguments outlined in the leaked document above (and backed up by anecdotal evidence), we can conclude that the “existential threat” AI companies are most concerned with is their inability to profit from the nascent “AI boom.” Google is particularly vulnerable to this threat given its heavy reliance on search-related advertising, which reportedly accounts for more than 80% of its yearly revenue. Regulation, while absolutely necessary, could be shaped in a way that stifles the rapid development of the open-source community by requiring complex security, privacy, or other restrictions, thereby making it illegal (or at least cost-prohibitive) for an individual to develop an LLM on their laptop. Since many “AI experts” directly work for, or receive funding from, the companies that signed the letter, it stands to reason that the aim of this proclamation is to ensure that they have a hand in shaping the eventual regulations in a way which ensures their monopolistic domination of the AI industry.

––

I have a different take on the “singularity” which is informed by recently completing Palo Alto – an excellent history of American capitalism vis-a-vis the tech industry. The basic idea is that capitalists have long sought to replace employees with automation and, for those tasks they can’t fully automate, to make the workers performing them function more like machines. In this reading, the “singularity” is not achieved simply by making machines “sentient,” but by simultaneously turning humans into machines, effectively lowering the bar an AI has to jump over to achieve sentience; if you work in an Amazon Fulfillment Center, you are already functioning very close to a machine, and much of your work is probably focused on training the robots that will replace you. Keep in mind that the crucial difference between GPT-3 and ChatGPT was the addition of reinforcement learning from human feedback (or RLHF), which involved underpaying and overworking Kenyan contractors to make the model “less toxic” (this exploitative process is a “joke” for AI researchers). That the AI industry’s development will likely coincide with a rise in factory-like conditions for those asked to train the models is no less of a disastrous scenario, but at least in this reading we can rightfully point the finger at the capitalists for the “end of the world” rather than AI. To modify William Gibson’s adage (which might not actually be his): “the apocalypse is already here, it’s just not evenly distributed.”

TLDR; The source code is here.

As a part of its day-to-day operations, Bushwick Ayuda Mutua makes regular use of short URLs and QR codes to share links to assistance request forms, volunteer sign-up forms, and other important information hosted online.

Like many small groups, BAM relied on bit.ly and a bevy of other QR-code generation platforms which either cost a lot of money (bit.ly is $30/month) or harvest your data, exposing community members to potential privacy violations.

However, the underlying technology to create short URLs and QR codes is fairly simple and can be easily replicated using urlzap, Python’s qrcode package, and GitHub’s Actions and Pages products.

I packaged these tools together into baml.ink which can be forked and reconfigured to create your own, fully-static short URL / QR code generation service.

The repository contains a human-readable/editable yaml file (named “baml.yaml” :p ) which looks something like this:

urls:
  ig: https://www.instagram.com/bushwickayudamutua/

Here, “ig” is the path of the short link, so you could then share “baml.ink/ig” and it would point to “https://www.instagram.com/bushwickayudamutua/”. Each time this file is updated in the “main” branch, a GitHub Actions workflow runs the urlzap GitHub Action, which fetches the metadata for the long URL and creates a static HTML file that includes the following meta tag in the “<head>” of the document:

<meta http-equiv="refresh" content="0; url=https://www.instagram.com/bushwickayudamutua/" />

This tells browsers to redirect the visitor to “https://www.instagram.com/bushwickayudamutua/”. By also including the metadata which is present on the source page, the short URL will appear normally when unfurled within messaging apps.

This static HTML file is then added to the “gh-pages” branch of the repository and deployed as a new page hosted by GitHub. You can see a full example of such a file here.
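If you’re curious what this step boils down to, here is a rough Python sketch of the idea. To be clear, this is not urlzap’s actual code: the function names, the `og:title` tag standing in for the copied metadata, and the `{short_path}/index.html` output layout are all my own assumptions for illustration.

```python
from html import escape
from pathlib import Path


def build_redirect_page(long_url: str, title: str = "") -> str:
    """Return a minimal HTML page that immediately redirects to long_url."""
    safe_url = escape(long_url, quote=True)
    # Fall back to the URL itself when no title metadata is supplied.
    safe_title = escape(title or long_url, quote=True)
    return (
        "<!DOCTYPE html>\n"
        "<html>\n"
        "<head>\n"
        f"  <title>{safe_title}</title>\n"
        # Hypothetical stand-in for the metadata copied from the source page.
        f'  <meta property="og:title" content="{safe_title}" />\n'
        f'  <meta http-equiv="refresh" content="0; url={safe_url}" />\n'
        "</head>\n"
        "<body></body>\n"
        "</html>\n"
    )


def write_redirects(urls: dict, out_dir: Path) -> list:
    """Write one redirect page per short path (assumed layout: <short_path>/index.html)."""
    written = []
    for short_path, long_url in urls.items():
        page = out_dir / short_path / "index.html"
        page.parent.mkdir(parents=True, exist_ok=True)
        page.write_text(build_redirect_page(long_url), encoding="utf-8")
        written.append(page)
    return written
```

Calling `write_redirects({"ig": "https://www.instagram.com/bushwickayudamutua/"}, Path("site"))` would produce a single `site/ig/index.html` containing the meta refresh tag shown above.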

In addition to the link shortening process, a second script is executed each time there is an update to the “main” branch. It iterates through the list of URLs in “baml.yaml”, generates a QR code for each short URL, and writes it to the “qr/” directory of the repository. These images take the format: “https://baml.ink/qr/{short_path}.png”. So, given the Instagram example above, the QR code would be hosted at baml.ink/qr/ig.png.
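The QR step can be sketched in a few lines of Python. This is a simplified illustration rather than the script in the repository: the function names are hypothetical, and it takes the already-parsed “urls” mapping as a plain dict instead of reading “baml.yaml” itself. The only third-party call is `qrcode.make(...)`, which needs the qrcode package installed with Pillow support.

```python
from pathlib import Path

BASE_URL = "https://baml.ink"


def qr_targets(urls: dict, base_url: str = BASE_URL, qr_dir: Path = Path("qr")) -> dict:
    """Map each short path to (short URL to encode, PNG path to write)."""
    return {
        short_path: (f"{base_url}/{short_path}", qr_dir / f"{short_path}.png")
        for short_path in urls
    }


def write_qr_codes(urls: dict, base_url: str = BASE_URL, qr_dir: Path = Path("qr")) -> None:
    """Generate one PNG QR code per short URL."""
    import qrcode  # third-party; install with: pip install "qrcode[pil]"

    qr_dir.mkdir(parents=True, exist_ok=True)
    for short_url, png_path in qr_targets(urls, base_url, qr_dir).values():
        # The QR code encodes the short URL, not the long destination URL.
        qrcode.make(short_url).save(str(png_path))
```

With the Instagram example, `qr_targets({"ig": "https://www.instagram.com/bushwickayudamutua/"})` maps “ig” to the short URL “https://baml.ink/ig” and the file “qr/ig.png”. Encoding the short URL rather than the long one means a printed QR code keeps working even if the destination in “baml.yaml” changes later.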

While this project must be hosted on GitHub to remain free, by centralizing all short URL and QR code generation into a single file, volunteers can use GitHub’s built-in code editor to easily add new URLs to “baml.yaml” and commit their changes, all without ever opening a terminal or cloning a repository. These changes are then automatically applied via GitHub Actions such that they should see their short URL and QR code go live within minutes.