Raven Chacon - For Four (Caldera)

I was first introduced to Raven Chacon at Dronefest last year when he performed a transfixing three-hour noise set involving hyper-directional speakers. By manually adjusting their position, often at high speeds, he created disorienting effects, like laser beams or overdriven insects buzzing around the circular space. I had never seen or heard anything like it and instantly became a fan.

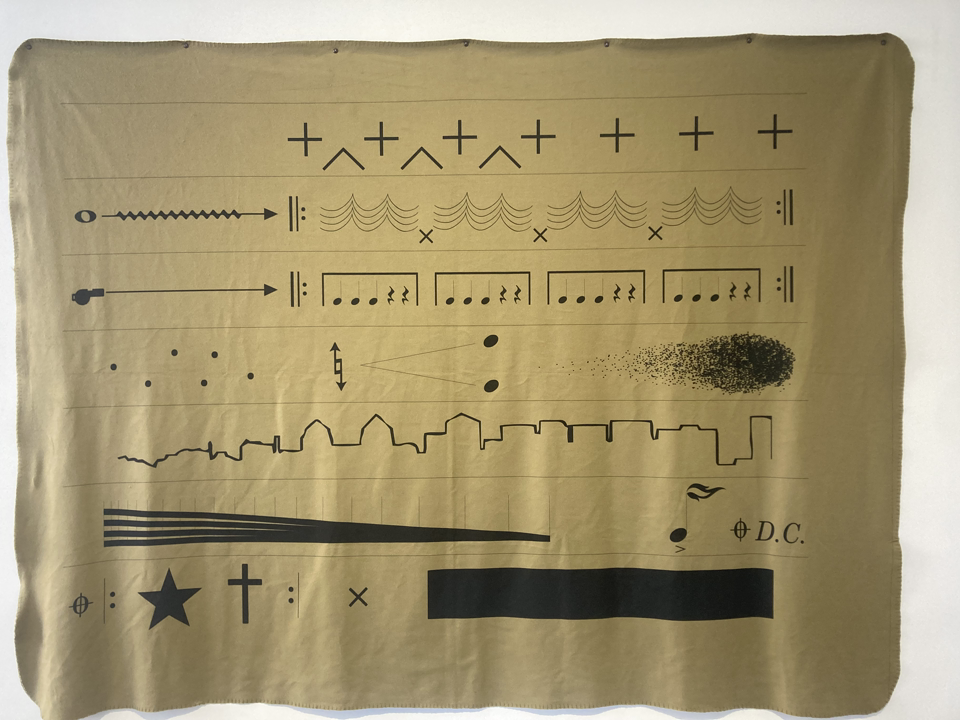

I’ve since seen one other composition of his performed, in which a quartet interpreted one of the graphical scores for which he is well known.

This piece, currently on display at the Swiss Institute in NYC, trades a graphical score for the shapes of the rolling hills surrounding the Valles Caldera in New Mexico, which was formed 1.25 million years ago when a volcano erupted (it’s also 15 miles from Los Alamos National Laboratory, where the atomic bomb was developed).

In the piece, four singers are positioned around a small pond, each facing in a different direction. The “singers slowly rotate and while scanning the horizon line, singing the contour of the landscape.” The resulting sounds are haunting and beautiful, as the overlapping voices gradually shift between harmony and dissonance, evoking the tension between the tranquility of the landscape and the violence of the forces that created it.